Ensemble Learning

What is Ensemble Learning

From The Hundred-Page Machine Learning Book:

Ensemble learning is a learning paradigm that, instead of trying to learn one super-accurate model, focuses on training a large number of low-accuracy models and then combining the predictions given by those weak models to obtain a high-accuracy meta-model.

- It is a machine learning technique that combines multiple models to improve the overall performance of the system.

- It can be computationally expensive and may require more resources than a single model.
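To see why combining weak models can help, consider an idealized case: n classifiers that are each correct with probability p and whose errors are independent. The independence assumption is made here purely for illustration (models trained on the same data usually have correlated errors, so real gains are smaller), and the function name is hypothetical. Under that assumption, the accuracy of a majority vote follows from the binomial distribution:

```python
from math import comb

def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that more than half of n voters are correct.

    Assumes n is odd (no ties) and that each voter is correct with
    probability p independently of the others (an idealization).
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

# Accuracy of the vote for a growing number of 60%-accurate voters.
for n in (1, 3, 11, 101):
    print(n, round(majority_vote_accuracy(0.6, n), 3))
```

With p = 0.6, three voters already reach 3 · 0.6² · 0.4 + 0.6³ = 0.648, and the value keeps rising toward 1 as n grows, provided p stays above 0.5.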

Types

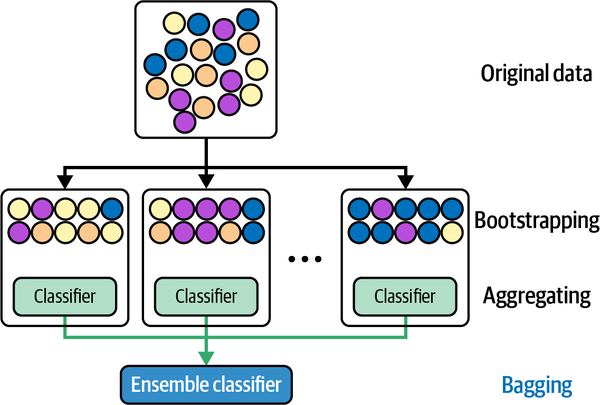

Bagging (Bootstrap Aggregating)

- How it works

  - Bagging trains multiple models independently, each on a bootstrap sample of the training data (a random sample drawn with replacement, typically the same size as the original set).

  - The models' predictions are combined by a simple majority vote (for classification) or an average (for regression) to make the final prediction.

  - Bagging mainly reduces variance, so it improves accuracy and stability most when the individual models are prone to overfitting.

- Examples

  - Random Forest can be seen as a bagging method for decision trees; it additionally considers only a random subset of features at each split.

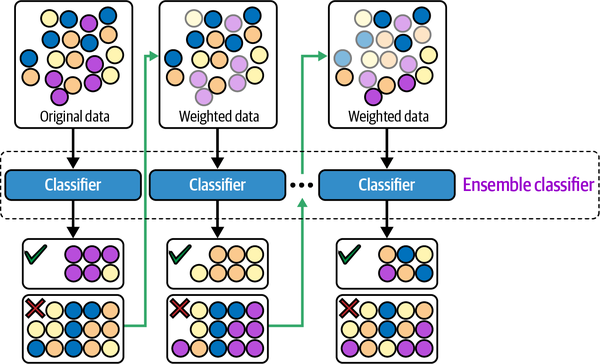
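The bagging procedure described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the base model (a one-dimensional threshold classifier, or "decision stump"), the toy data, and all function names are assumptions chosen to keep the example self-contained.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a 1-D threshold rule: predict `left` if x <= t, else `right`."""
    best = None
    for t in sorted(set(xs)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= t else right) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: left if x <= t else right

def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train each stump on its own bootstrap sample of the data."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap: draw n indices with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagging_predict(models, x):
    """Combine the models by simple majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Toy data (hypothetical): class 1 for larger x, with one noisy label at x = 2.2.
xs = [0.1, 0.4, 0.9, 1.3, 1.8, 2.2, 2.7, 3.1, 3.6, 4.0]
ys = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]

models = bagging_fit(xs, ys)
print(bagging_predict(models, 0.5), bagging_predict(models, 3.5))
```

Each stump sees a slightly different sample, so individual thresholds vary; the vote averages out that variation. For regression, the same structure applies with the vote replaced by a mean of the models' outputs.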

Boosting

- How it works

  - Boosting trains models sequentially, with each new model attempting to correct the errors of the models before it.

  - The final prediction combines the predictions of all the models, typically as a weighted sum (in AdaBoost, each model's weight reflects its accuracy).

  - Boosting is effective at reducing bias (i.e. addressing underfitting), especially when the individual models are simple and have high bias.

- Examples

  - AdaBoost (Adaptive Boosting)

  - Gradient Boosting

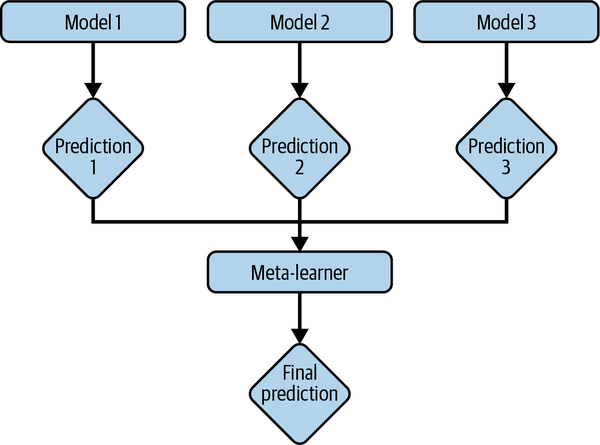
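As a concrete instance of the sequential scheme above, here is a minimal sketch of AdaBoost for binary labels in {-1, +1}, using one-dimensional decision stumps as the weak learners. The stump search, the toy data, and the function names are assumptions made for illustration; production libraries differ in many details.

```python
import math

def stump_predict(x, t, sign):
    """Predict `sign` for x < t and `-sign` otherwise."""
    return sign if x < t else -sign

def fit_weighted_stump(xs, ys, w):
    """Return (weighted_error, threshold, sign) of the best stump."""
    points = sorted(set(xs))
    thresholds = [(a + b) / 2 for a, b in zip(points, points[1:])]
    best = None
    for t in thresholds:
        for sign in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w)
                      if stump_predict(x, t, sign) != y)
            if best is None or err < best[0]:
                best = (err, t, sign)
    return best

def adaboost_fit(xs, ys, n_rounds):
    n = len(xs)
    w = [1.0 / n] * n                      # start with uniform sample weights
    ensemble = []
    for _ in range(n_rounds):
        err, t, sign = fit_weighted_stump(xs, ys, w)
        err = max(err, 1e-10)              # guard against log of infinity
        alpha = 0.5 * math.log((1 - err) / err)   # model weight
        ensemble.append((alpha, t, sign))
        # Increase the weight of misclassified samples, decrease the rest.
        w = [wi * math.exp(-alpha * y * stump_predict(x, t, sign))
             for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted sum of stump predictions."""
    score = sum(alpha * stump_predict(x, t, sign) for alpha, t, sign in ensemble)
    return 1 if score >= 0 else -1

# Toy data (hypothetical): no single threshold separates the two classes.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

ensemble = adaboost_fit(xs, ys, n_rounds=3)
print([adaboost_predict(ensemble, x) for x in xs])
```

On this data a single stump misclassifies at least three points, while the weighted combination of three stumps fits all ten training labels. That is the bias reduction the section describes: each round concentrates on the samples the earlier stumps got wrong.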

Stacking

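- How it works

  - Train several base learners on the training data, then train a meta-learner that takes the base learners' outputs as input and produces the final prediction.

  - Unlike a plain majority vote (for classification) or average (for regression), which combine the base learners with a fixed rule, the meta-learner learns how to weight them. It is usually trained on out-of-fold predictions so that it does not simply reward base learners that overfit.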
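A minimal sketch of stacking for one-dimensional regression follows. Everything specific in it is an assumption for illustration: the two base learners (a least-squares line and a 1-nearest-neighbour regressor), the meta-learner (a least-squares weighting of the two base outputs, without intercept), the fold assignment, the toy data, and the function names.

```python
def fit_linear(xs, ys):
    """Base learner 1: ordinary least-squares line."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return lambda x: my + slope * (x - mx)

def fit_1nn(xs, ys):
    """Base learner 2: copy the target of the nearest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

BASE_FITTERS = [fit_linear, fit_1nn]

def out_of_fold_predictions(xs, ys, k):
    """For each sample, predictions from base models that never saw it."""
    n = len(xs)
    oof = [[0.0] * len(BASE_FITTERS) for _ in range(n)]
    for fold in range(k):
        train = [i for i in range(n) if i % k != fold]
        held_out = [i for i in range(n) if i % k == fold]
        tx, ty = [xs[i] for i in train], [ys[i] for i in train]
        for j, fit in enumerate(BASE_FITTERS):
            model = fit(tx, ty)
            for i in held_out:
                oof[i][j] = model(xs[i])
    return oof

def fit_meta(features, ys):
    """Meta-learner: least squares for y ~ a*f0 + b*f1 (2x2 normal equations)."""
    s00 = sum(f[0] * f[0] for f in features)
    s01 = sum(f[0] * f[1] for f in features)
    s11 = sum(f[1] * f[1] for f in features)
    t0 = sum(f[0] * y for f, y in zip(features, ys))
    t1 = sum(f[1] * y for f, y in zip(features, ys))
    det = s00 * s11 - s01 * s01
    return (t0 * s11 - s01 * t1) / det, (s00 * t1 - s01 * t0) / det

def stacking_fit(xs, ys, k=4):
    a, b = fit_meta(out_of_fold_predictions(xs, ys, k), ys)
    base = [fit(xs, ys) for fit in BASE_FITTERS]   # refit on all the data
    return (lambda x: a * base[0](x) + b * base[1](x)), (a, b)

# Toy data (hypothetical): a linear trend with a jump between x = 5 and x = 6.
xs = [float(i) for i in range(12)]
ys = [0.1, 0.9, 2.1, 2.9, 4.2, 5.0, 9.1, 9.8, 11.2, 11.9, 13.1, 14.0]

model, weights = stacking_fit(xs, ys)
print(weights, model(5.5))
```

The learned weights show how much the meta-learner trusts each base learner on held-out data. On exactly linear targets, for example, the out-of-fold predictions of the line are perfect, so the fit puts essentially all the weight on it.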